Reducing latency on multicore PCs - Success!

- BigJohnT


John


- ArcEye


Regarding multicore latency reduction: I've just done some quick tests and I am not able to reproduce the results.

I have a quad-core machine in the house which gives a steady 31179 max jitter on the base thread as is, with stock 10.04 and the 2.6.32-122-rtai kernel.

Test load: 4 instances of glxgears, plus opening Firefox and browsing.

With isolcpus=1,2,3 acpi_irq_nobalance noirqbalance on the boot line, irqbalance uninstalled, the irqbalance.conf script removed and your script inserted, I got a max jitter of 31218 with exactly the same loading for the same time.

It runs like crap though on just 1 core.
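For anyone repeating this test, a quick way to double-check which CPUs the boot line actually isolates is to parse the kernel command line. The sketch below is a minimal illustration that only understands the plain forms `isolcpus=1,2,3` and `isolcpus=1-3`; the helper names are hypothetical and not part of LinuxCNC or any script posted in this thread.

```python
# Hypothetical helpers: parse isolcpus= from a kernel command line.
# Only plain CPU numbers and ranges ("1,2,3", "1-3") are understood.


def parse_cpu_list(spec: str) -> set[int]:
    """Turn a CPU list such as "1,2,3" or "1-3" into a set of CPU ids."""
    cpus: set[int] = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-", 1)
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return cpus


def isolated_cpus(cmdline: str) -> set[int]:
    """Return the CPUs named by isolcpus=, or an empty set if it is absent."""
    for token in cmdline.split():
        if token.startswith("isolcpus="):
            return parse_cpu_list(token[len("isolcpus="):])
    return set()


if __name__ == "__main__":
    # On a running system, read the real line with open("/proc/cmdline").read().
    cmdline = "ro quiet isolcpus=1,2,3 acpi_irq_nobalance noirqbalance"
    isolated = isolated_cpus(cmdline)
    housekeeping = set(range(4)) - isolated  # assumes a 4-CPU machine
    print("isolated:", sorted(isolated))          # isolated: [1, 2, 3]
    print("housekeeping:", sorted(housekeeping))  # housekeeping: [0]
```

The CPUs left over after removing the isolated ones are the ones that still run ordinary tasks and, with the script above, the interrupts.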

mpstat -P ALL confirmed that the other 3 cores had been isolated:

Linux 2.6.32-122-rtai (INTEL-QUAD) 13/12/12 _i686_ (4 CPU)

| Time | CPU | %usr | %nice | %sys | %iowait | %irq | %soft | %steal | %guest | %idle |
|---|---|---|---|---|---|---|---|---|---|---|
| 17:36:35 | all | 0.16 | 0.00 | 14.27 | 1.30 | 0.05 | 0.02 | 0.00 | 0.00 | 84.20 |
| 17:36:35 | 0 | 0.65 | 0.00 | 58.86 | 5.38 | 0.19 | 0.10 | 0.00 | 0.00 | 34.83 |
| 17:36:35 | 1 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 99.99 |
| 17:36:35 | 2 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 |
| 17:36:35 | 3 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 100.00 |

The IRQs have also been moved to CPU 0 (the low figures for CPUs 1, 2 and 3 are constant throughout and presumably are those that occurred before the script was run):

| IRQ | CPU0 | CPU1 | CPU2 | CPU3 | Type | Device / description |
|---|---|---|---|---|---|---|
| 0 | 50 | 0 | 0 | 0 | IO-APIC-edge | timer |
| 1 | 3 | 1 | 0 | 1 | IO-APIC-edge | i8042 |
| 8 | 0 | 0 | 0 | 0 | IO-APIC-edge | rtc0 |
| 9 | 0 | 0 | 0 | 0 | IO-APIC-fasteoi | acpi |
| 12 | 2 | 2 | 1 | 1 | IO-APIC-edge | i8042 |
| 16 | 1847 | 28 | 20 | 27 | IO-APIC-fasteoi | uhci_hcd:usb3 |
| 17 | 19 | 0 | 0 | 0 | IO-APIC-fasteoi | |
| 18 | 266 | 20 | 20 | 11 | IO-APIC-fasteoi | ehci_hcd:usb1, uhci_hcd:usb5, uhci_hcd:usb8 |
| 19 | 16354 | 560 | 566 | 562 | IO-APIC-fasteoi | ata_piix, ata_piix, uhci_hcd:usb7 |
| 21 | 0 | 0 | 0 | 0 | IO-APIC-fasteoi | uhci_hcd:usb4 |
| 22 | 245 | 1 | 0 | 2 | IO-APIC-fasteoi | ohci1394, HDA Intel |
| 23 | 111 | 27 | 29 | 29 | IO-APIC-fasteoi | ehci_hcd:usb2, uhci_hcd:usb6 |
| 24 | 481 | 0 | 0 | 0 | PCI-MSI-edge | eth0 |
| 25 | 377712 | 1 | 0 | 1 | PCI-MSI-edge | i915 |
| NMI | 0 | 0 | 0 | 0 | | Non-maskable interrupts |
| LOC | 117573 | 93136 | 4044 | 1135 | | Local timer interrupts |
| SPU | 0 | 0 | 0 | 0 | | Spurious interrupts |
| PMI | 0 | 0 | 0 | 0 | | Performance monitoring interrupts |
| PND | 0 | 0 | 0 | 0 | | Performance pending work |
| RES | 551 | 19010 | 313 | 261 | | Rescheduling interrupts |
| CAL | 1110 | 439 | 437 | 435 | | Function call interrupts |
| TLB | 0 | 0 | 0 | 0 | | TLB shootdowns |
| TRM | 0 | 0 | 0 | 0 | | Thermal event interrupts |
| THR | 0 | 0 | 0 | 0 | | Threshold APIC interrupts |
| MCE | 0 | 0 | 0 | 0 | | Machine check exceptions |
| MCP | 2 | 2 | 2 | 2 | | Machine check polls |

ERR: 3, MIS: 0

I have some test kernels I compiled a while back to test the effects of various kernel options; I think the best of these got the max jitter down to 15K on the same machine (albeit that ran like crap too with 3 cores turned off).

Shame, but those are the figures I got.

regards


- awallin


www.anderswallin.net/2012/12/real-time-tuning/

Can you post the output of your watchirqs?
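I don't know exactly what watchirqs prints, but the idea of watching which CPU services each interrupt can be sketched by diffing two snapshots of /proc/interrupts. A minimal Python version, assuming the column layout of the /proc/interrupts listing posted above (a CPUn header row, then one row per IRQ); the function names are hypothetical:

```python
import time

# Hypothetical helpers: compare two /proc/interrupts snapshots and report
# which IRQs were serviced on which CPU in between.


def parse_interrupts(text: str) -> dict[str, list[int]]:
    """Map each row label ("19", "LOC", ...) to its per-CPU counts."""
    lines = [line for line in text.splitlines() if line.strip()]
    ncpu = len(lines[0].split())  # header row: CPU0 CPU1 ...
    table: dict[str, list[int]] = {}
    for line in lines[1:]:
        fields = line.split()
        counts: list[int] = []
        for field in fields[1:1 + ncpu]:
            if not field.isdigit():
                break  # ERR/MIS rows carry a single total, not per-CPU counts
            counts.append(int(field))
        table[fields[0].rstrip(":")] = counts
    return table


def interrupt_deltas(before: dict[str, list[int]],
                     after: dict[str, list[int]]) -> dict[str, list[int]]:
    """Per-CPU increments between snapshots, keeping only rows that changed."""
    deltas: dict[str, list[int]] = {}
    for label, new_counts in after.items():
        old_counts = before.get(label, [0] * len(new_counts))
        diff = [new - old for new, old in zip(new_counts, old_counts)]
        if any(diff):
            deltas[label] = diff
    return deltas


def watch(seconds: float = 5.0) -> dict[str, list[int]]:
    """Snapshot /proc/interrupts twice (Linux only) and return the changes."""
    with open("/proc/interrupts") as f:
        before = parse_interrupts(f.read())
    time.sleep(seconds)
    with open("/proc/interrupts") as f:
        after = parse_interrupts(f.read())
    return interrupt_deltas(before, after)


if __name__ == "__main__":
    # Illustrative rows; the "after" counts are invented for the example.
    before = """          CPU0   CPU1   CPU2   CPU3
 19:     16354    560    566    562   IO-APIC-fasteoi   ata_piix
 25:    377712      1      0      1   PCI-MSI-edge      i915
LOC:    117573  93136   4044   1135   Local timer interrupts
"""
    after = """          CPU0   CPU1   CPU2   CPU3
 19:     16400    560    566    562   IO-APIC-fasteoi   ata_piix
 25:    378000      1      0      1   PCI-MSI-edge      i915
LOC:    118000  93200   4050   1140   Local timer interrupts
"""
    for label, diff in interrupt_deltas(parse_interrupts(before),
                                        parse_interrupts(after)).items():
        print(label, diff)
    # 19 [46, 0, 0, 0]
    # 25 [288, 0, 0, 0]
    # LOC [427, 64, 6, 5]
```

If the IRQ pinning works, the device rows should only ever grow in the CPU0 column; the local timer row will still tick on every CPU.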

Any ideas why I am not seeing improvements?

Anders


- ArcEye


CPU

- disable Vanderpool Technology (CPU virtualization stuff for VMs)

- disable C1E support

I don't actually have those options in my BIOS, although I have disabled similar functions regarding power management and temperature control.

I think virtualisation is turned off in the kernel via the RTAI .config anyway.


- RGH


The low latency values I get are NOT because I accidentally changed something in the BIOS like C1E!!! That's not me. Maybe I should elaborate a bit more on my testing and optimization process. I spent days on it, running different old machines I had collecting dust and looking for the best one for LinuxCNC.

The initial test, to get a picture, was with BIOS default settings, which gave me a regular jitter of around 15000ns with peaks of 30000+ (no isolcpus). Next I changed all the obvious BIOS values like C1E, SpeedStep etc. and suspicious ones like hardware monitoring, temperature regulation of the CPU fan and others, and set isolcpus. After further tests, re-enabling ACPI 2.0 and fixing the sleep mode to S3 (instead of AUTO) resulted in <4000ns jitter nearly all the time, with irregular peaks of up to 10000ns that happened during really heavy testing over a period of an hour.

The next step was changing "not so obvious" BIOS values back to see which had an influence and which did not (keeping all CPU settings off except TM and the Execute Disable Bit!), trying other VGA cards, and more and more tests. These revealed that reserving IRQs in the PnP configuration changed the rate at which the 10000ns peaks happened. That led me to the IRQ stuff.

Observing the CPU usage showed that the second CPU irregularly jumped to 2% load although it was isolated. Watching the IRQ handling revealed that the second CPU was doing a lot of the handling. I changed that with the attached scripts and the 10000ns peaks were gone! With the affinity mask changed to F, the ~10000ns peaks still show up after running the machine under heavy load for a longer period of time.
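The attached scripts aren't reproduced here, but the mechanism behind them, per-IRQ CPU affinity, is a hex bitmask written to /proc/irq/<n>/smp_affinity, where bit i selects CPU i. So a mask of 1 means CPU0 only, 3 means CPU0 and CPU1, and F sets the bits for CPUs 0-3, which includes the isolated core. A minimal sketch of building and applying such a mask; `affinity_mask` and `pin_irq` are hypothetical names, writing requires root, and the default is a dry run that only reports what would be written:

```python
from pathlib import Path

# Hypothetical helpers for /proc/irq/<n>/smp_affinity. Not the scripts
# attached to this thread; a sketch of the same idea.


def affinity_mask(cpus) -> str:
    """Hex bitmask with bit i set for every CPU i in `cpus`."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return format(mask, "x")


def pin_irq(irq: int, cpus, dry_run: bool = True) -> str:
    """Restrict `irq` to `cpus`; only touches /proc when dry_run is False."""
    path = Path("/proc/irq") / str(irq) / "smp_affinity"
    mask = affinity_mask(cpus)
    if not dry_run:
        path.write_text(mask + "\n")  # needs root; may fail for some IRQs
    return f"{path} <- {mask}"


if __name__ == "__main__":
    print(affinity_mask({0}))           # 1: CPU0 only
    print(affinity_mask({0, 1}))        # 3: both cores of a dual-core
    print(affinity_mask({0, 1, 2, 3}))  # f: CPUs 0-3
    print(pin_irq(19, {0}))             # /proc/irq/19/smp_affinity <- 1
```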

So NO! This is not wishful thinking but a measure with reproducible effects on two core2duo machines!

Actually I think it is quite obvious that unloading the CPU on which the realtime stuff happens CAN have an impact, at least if the max jitter on a machine happens while handling an interrupt request and is not induced by something else.

Cheers,

Ruben

PS: In the meantime I installed the software OpenGL drivers in order to be able to start LinuxCNC in a remote NX session. I will have to do dedicated testing to see what is going on, but when using the remote session (normal "administration work", browsing and doing this and that) the jitter is even lower now, at around 1800ns.


- RGH



- awallin


@Anders

I will make a screenshot and post it.

How do you create those histograms? Are the jitter values logged and I don't even know?

The histogram is a recent hack:

www.anderswallin.net/2012/12/latency-histogram/

Creating the graph is a manual process for now:

- run the appropriate file with halcmd -f

- stop it with halcmd stop after a while (e.g. when the output file is 1M in size)

- plot with the python script
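For the plotting step, the binning itself is simple. The sketch below is not the Python script from the blog post; it assumes a hypothetical capture file with one sample per line whose last whitespace-separated field is the jitter in nanoseconds (the real halsampler output layout may differ), and it prints a text histogram instead of drawing a plot:

```python
from collections import Counter

# Hypothetical: bin jitter samples (ns) from a text capture into a histogram.


def jitter_histogram(lines, bin_ns: int = 1000) -> dict[int, int]:
    """Count samples per bin; keys are the lower bin edges in ns."""
    counts: Counter = Counter()
    for line in lines:
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        value = float(fields[-1])  # assumes jitter is the last column
        counts[int(value // bin_ns) * bin_ns] += 1  # floor also works for negatives
    return dict(sorted(counts.items()))


def print_histogram(hist: dict[int, int], width: int = 40) -> None:
    """Text bar chart scaled so the fullest bin is `width` characters."""
    peak = max(hist.values())
    for edge, n in hist.items():
        bar = "#" * max(1, round(width * n / peak))
        print(f"{edge:>8} ns {n:>6} {bar}")


if __name__ == "__main__":
    # With a real capture ("capture.txt" is a placeholder name):
    #   with open("capture.txt") as f: hist = jitter_histogram(f)
    sample = ["0 850", "1 -1200", "2 1500", "3 400", "4 3100"]
    hist = jitter_histogram(sample)
    print(hist)  # {-2000: 1, 0: 2, 1000: 1, 3000: 1}
    print_histogram(hist)
```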

If there were an easy programmatic way to access the sampler/halsampler FIFO, it would be straightforward to plot a "live" latency histogram. I may be inspired to look into this at some point.

AW


- ArcEye


This is not wishful thinking but a measure with reproducible effects on two core2duo machines!

Don't forget to put the data about the machines into the latency database.

wiki.linuxcnc.org/cgi-bin/wiki.pl?Latency-Test

3600 max jitter is a pipe dream for most people; they will want to know precisely which boards you tested.

regards


- tensor


I started with a pretty constant max jitter of 40000.

The first tweak was an overall reconfiguration of the BIOS according to Spainman's first post. To be able to change the Vanderpool Technology setting (to disable it), I had to enter the BIOS after a cold start; if I enter the BIOS after a reboot, the option is not available.

Next I applied the interrupt reassignment with the very handy tools provided by Spainman.

After these changes, the long-term jitter was between 500 and 3500. However, starting glxgears caused peaks of up to 70000. So I replaced the NVidia video card with an ATI one, and now the jitter is always below 3500.

The final hardware setup is:

Mainboard: ASUS P5B deluxe

Processor: Core2Duo E6600 2x2.4GHz

RAM: 4 GB Corsair PC2-800 CL4

Video: Radeon X300, 128MB

HDD: 80GB Samsung IDE

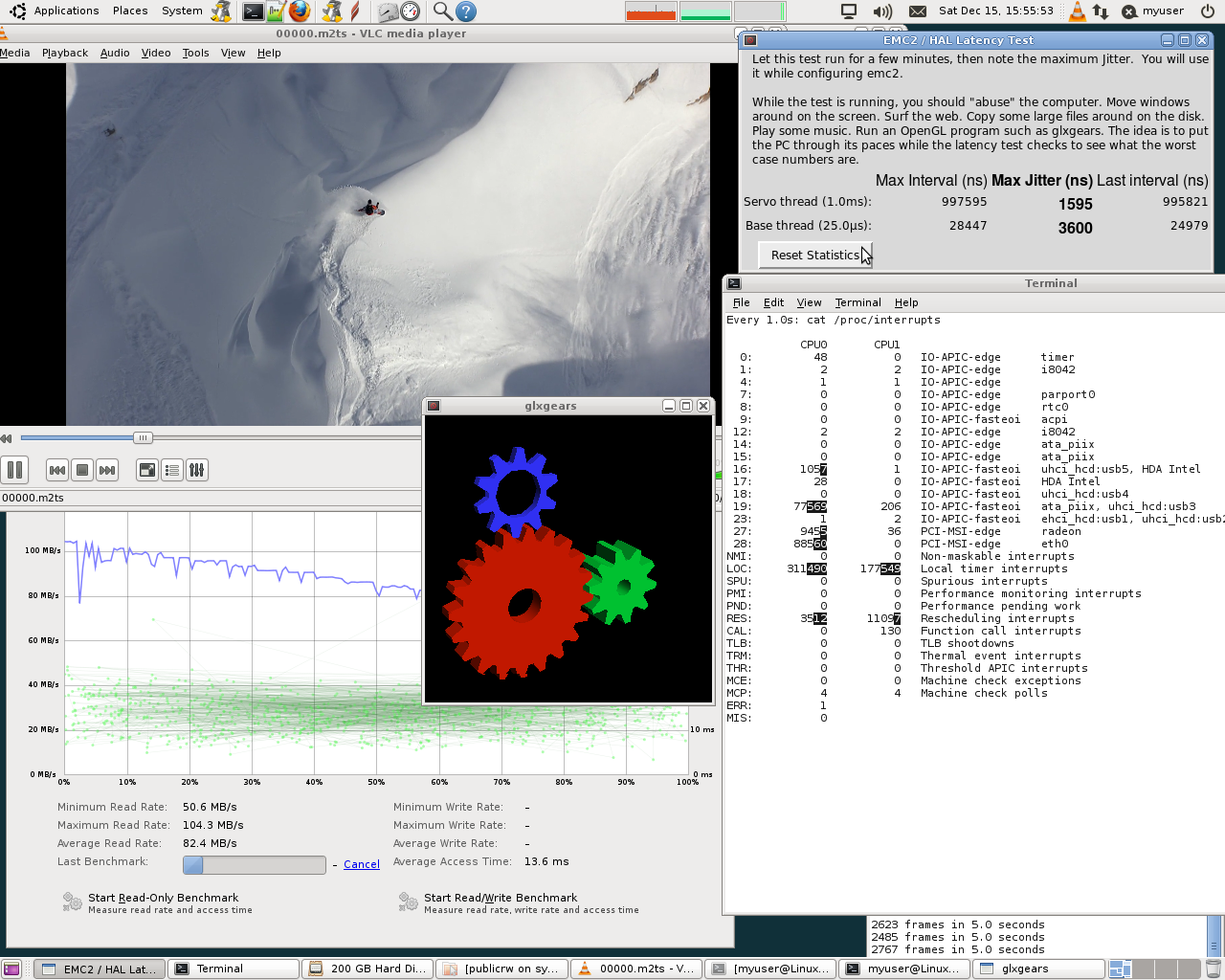

-> 3500 max jitter with 5 glxgears and Full HD video playback (which shows only a few frames every 10 seconds), measured for 1 hour.

cheers,

stefan


- green751


I bought and have installed a Gigabyte GA-E350N motherboard with 4 GB DDR1600 RAM. Storage is a 64 GB SSD. An install from the latest live CD boots in about 20 seconds. For those that don't know, this is a mini-ITX low-power PC with a dual-core AMD CPU running at 1.6 GHz. The CPU includes an integrated Radeon GPU, although I am not using it at the moment because loading the radeon drivers seemed to greatly increase latency in previous testing.

I tried various configurations in the BIOS and GRUB to improve latency test scores. Oddly, it seemed to give better scores when I did *not* use the isolcpus parameter, giving me stable 10000/4000 numbers with both CPUs enabled but all the other BIOS switches set as described here.

I then decided to try the scripts here and check latency, including the isolcpus parameter. So far it's showing numbers of about 4000/1200 while running two copies of glxgears and browsing with Firefox... a significant improvement if correct (this is, after all, a benchmark rather than real work).

Just another data point for you. I'll post later results after I run it in for 24 hours. I might try changing the base thread period to match the servo thread, and changing the servo period to something like 125us, to simulate running an 8 kHz servo thread. If anyone can think of latency test settings to verify that a servo thread that fast will really work, I'd appreciate it.
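For the fast servo thread idea, the arithmetic is worth writing down: a thread period T gives a rate of 1/T, so 125 µs is 8 kHz, and the max jitter from the latency test can be compared with that period. The example takes the larger of the two numbers reported here, 4000 ns. The function names are hypothetical, and how much of the period jitter may safely consume is a judgment call this thread does not settle:

```python
# Hypothetical helpers for relating thread period, rate, and measured jitter.


def thread_frequency_hz(period_ns: int) -> float:
    """Rate at which a thread with period `period_ns` runs."""
    return 1e9 / period_ns


def jitter_fraction(max_jitter_ns: int, period_ns: int) -> float:
    """Worst-case jitter expressed as a fraction of the thread period."""
    return max_jitter_ns / period_ns


if __name__ == "__main__":
    servo_period_ns = 125_000  # 125 us, the servo period proposed above
    print(thread_frequency_hz(servo_period_ns))     # 8000.0
    print(thread_frequency_hz(1_000_000))           # 1000.0 (a 1 ms servo thread)
    print(jitter_fraction(4_000, servo_period_ns))  # 0.032, i.e. 3.2 %
```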

This computer is a replacement for my mill control PC, so I'll be installing a Mesa 5i20 and servo card, i.e. no software stepping... although the reason I want low latency is that I'd like to try doing software commutation on some brushless servos.

Erik
