Reducing latency on multicore pc's - Success!

11 Dec 2012 01:10 #27524

by PCW

Replied by PCW on topic Reducing latency on multicore pc's - Success!

Right.

Bits 0 and 1 together have the value 3, so inputs 0 _and_ 1 together result in a 1 output,

as does bit 2 on its own (value 4). So only input codes of 3 (00011b) and 4 (00100b) generate a '1' output;

all other codes generate a 0.
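
The bit arithmetic above can be sketched in Python. This is only an illustration, assuming the convention from the lut5 man page that the function value is the sum of the weights 2^code of every input code that should give a TRUE output; the helper names `lut5_function` and `lut5_output` are made up for this example and are not part of LinuxCNC.

```python
def lut5_function(true_codes):
    """Build the function value by summing the weight 2**code of each TRUE row."""
    function = 0
    for code in true_codes:
        if not 0 <= code <= 31:
            raise ValueError("input code must be in 0..31, got %r" % (code,))
        function |= 1 << code
    return function


def lut5_output(function, in0=0, in1=0, in2=0, in3=0, in4=0):
    """Look up the output for one combination of the five inputs."""
    # The five inputs form a 5-bit code: in0 is bit 0, in4 is bit 4.
    code = (int(bool(in0))
            | int(bool(in1)) << 1
            | int(bool(in2)) << 2
            | int(bool(in3)) << 3
            | int(bool(in4)) << 4)
    return bool((function >> code) & 1)


# Codes 3 (00011b) and 4 (00100b) are the only ones that should output 1.
function = lut5_function([3, 4])
print(hex(function))
print(lut5_output(function, in0=1, in1=1))  # code 3
print(lut5_output(function, in2=1))         # code 4
print(lut5_output(function, in0=1))         # code 1
```

Under that assumption the two TRUE rows have weights 8 and 16, so the function value is their sum.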

12 Dec 2012 02:07 #27596

by awallin

Replied by awallin on topic Reducing latency on multicore pc's - Success!

Hi Spainman,

I would be interested in trying your real-time tuning tips.

Can you try posting your attachment again? Or maybe use pastebin, or make a wiki page about it.

I assume you were trying this with Ubuntu 10.04 LTS and the RTAI kernel?

Anders

13 Dec 2012 20:45 #27664

by ArcEye

Replied by ArcEye on topic Reducing latency on multicore pc's - Success!

Ditto again,

Having read up on the subject I can see what you are doing and probably replicate it.

What confuses me is the use of isolcpus as well.

If the kernel option CONFIG_HOTPLUG_CPU is set and allows the 'unmounting' of CPUs, then with isolcpus=1,2,3 on a quad core, for instance, where else but core 0 can the interrupts be sent?

Are you saying that even with the other cores isolated, irqbalance and the APIC are still using the other cores for interrupts?

I shall have to have a play with it.

I can show that, for instance, echo "1" > /proc/irq/NN/smp_affinity will move most of the interrupts on IRQ NN to CPU 0.

Mapping all my IRQs to CPU 0 by this method, which I assume is what your script does at start up, showed a corresponding reduction in use of the other CPUs (albeit not a complete cessation of use).

(This is without isolcpus on the bootline)
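
For reference, the value echoed into smp_affinity is a hexadecimal bitmask with one bit per CPU, so "1" selects CPU 0 only. A small Python sketch of the conversion in both directions follows; the function names `cpus_to_mask` and `mask_to_cpus` are hypothetical helpers for illustration, not an existing tool.

```python
def cpus_to_mask(cpus):
    """Return the hex string to write to smp_affinity for a list of CPU numbers."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu  # bit N stands for CPU N
    return format(mask, "x")


def mask_to_cpus(mask_hex):
    """Return the CPU numbers selected by a hex affinity mask."""
    # Wide masks are printed in comma-separated groups; drop the commas first.
    mask = int(mask_hex.replace(",", ""), 16)
    return [cpu for cpu in range(mask.bit_length()) if (mask >> cpu) & 1]


print(cpus_to_mask([0]))           # CPU 0 only
print(cpus_to_mask([0, 1, 2, 3]))  # all four cores of a quad core
print(mask_to_cpus("f"))
```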

I have various development kernels on this machine, so I can try a few different things when time allows, but it would be nice to get a bit more detail on the findings that led you here.

regards

14 Dec 2012 00:54 #27692

by RGH

Replied by RGH on topic Reducing latency on multicore pc's - Success!

Sorry... I was away for a few days and couldn't respond. The initial post is updated now and contains a zip.

Regarding the questions:

- I've installed the machine from the latest LinuxCNC ISO, so yes, it's Ubuntu 10.04 LTS.

- isolcpus alone definitely does not prevent IRQ handling on the isolated core (at least on my machine)

- echoing into the smp_affinity files is what I do; it did not work without the kernel options I mentioned in my first post
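
A rough Python sketch of "echoing into the smp_affinity files" for every IRQ is shown here. It is not the script from the zip, only an illustration: the function name `pin_all_irqs` and its `irq_root` parameter are assumptions made for this example. It needs root to write under /proc/irq, and writes for IRQs that cannot be moved are expected to fail, so failures are collected rather than treated as fatal.

```python
import os


def pin_all_irqs(mask_hex="1", irq_root="/proc/irq"):
    """Write mask_hex into every <irq_root>/<N>/smp_affinity file.

    Returns two sorted lists of IRQ numbers: those that accepted the
    new mask and those where the write was refused.
    """
    moved, failed = [], []
    for name in os.listdir(irq_root):
        path = os.path.join(irq_root, name, "smp_affinity")
        # Only numbered IRQ directories are of interest here.
        if not name.isdigit() or not os.path.isfile(path):
            continue
        try:
            with open(path, "w") as handle:
                handle.write(mask_hex)
            moved.append(int(name))
        except (IOError, OSError):
            failed.append(int(name))
    return sorted(moved), sorted(failed)


# Example (as root): route everything that can be moved to CPU 0.
# moved, failed = pin_all_irqs("1")
```

Taking the directory as a parameter makes it possible to try the loop against a scratch directory before pointing it at the real /proc/irq.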

The lut5 function does not seem to be that simple ;-D as the discussion shows. I will have to read the posts in detail as soon as I have a little more spare time. Thanks for the information anyway - I'm sure it will help to figure out what it does exactly.

Cheers,

Ruben

14 Dec 2012 01:36 #27696

by BigJohnT

Replied by BigJohnT on topic Reducing latency on multicore pc's - Success!

I've just added a description of lut5 to the manual that might be a bit easier to read than the man page.

John

14 Dec 2012 01:58 - 15 Dec 2012 00:31 #27697

by ArcEye

Replied by ArcEye on topic Reducing latency on multicore pc's - Success!

Hi

Regarding multicore latency reduction: I've just done some quick tests and I am not able to reproduce the results.

I have a quad core machine in the house which brings in a steady 31179 max jitter on the base thread as is, with stock 10.04 and the 2.6.32-122-rtai kernel.

The test was 4 instances of glxgears plus opening Firefox and browsing.

With isolcpus=1,2,3 acpi_irq_nobalance noirqbalance on the boot line, irqbalance uninstalled, the irqbalance.conf script removed and your script inserted, max jitter was 31218 with the exact same loading for the same time.

It runs like crap though on just 1 core.
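
One way to double-check that boot options like these actually reached the kernel is to look at /proc/cmdline. The Python sketch below only parses such a line; the function names are hypothetical, the sample line (including its BOOT_IMAGE and root values) is invented for illustration, and it assumes isolcpus is given as a plain list or range of CPU numbers.

```python
def parse_cmdline(cmdline):
    """Split a kernel command line into {option: value}; bare flags map to True."""
    options = {}
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        options[key] = value if sep else True
    return options


def isolated_cpus(cmdline):
    """Return the CPU numbers listed in isolcpus=, e.g. '1,2,3' or '1-3'."""
    value = parse_cmdline(cmdline).get("isolcpus")
    if not value or value is True:
        return []
    cpus = []
    for part in value.split(","):
        if "-" in part:
            low, high = part.split("-")
            cpus.extend(range(int(low), int(high) + 1))
        else:
            cpus.append(int(part))
    return sorted(cpus)


# Invented sample; on a running system read open("/proc/cmdline").read() instead.
sample = ("BOOT_IMAGE=/vmlinuz root=/dev/sda1 ro quiet "
          "isolcpus=1,2,3 acpi_irq_nobalance noirqbalance")
print(isolated_cpus(sample))
print("noirqbalance" in parse_cmdline(sample))
```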

I proved the other 3 cores had been isolated from mpstat -P ALL

    Linux 2.6.32-122-rtai (INTEL-QUAD)  13/12/12  _i686_  (4 CPU)

    17:36:35  CPU   %usr  %nice   %sys  %iowait   %irq  %soft  %steal  %guest   %idle
    17:36:35  all   0.16   0.00  14.27     1.30   0.05   0.02    0.00    0.00   84.20
    17:36:35    0   0.65   0.00  58.86     5.38   0.19   0.10    0.00    0.00   34.83
    17:36:35    1   0.00   0.00   0.00     0.00   0.00   0.00    0.00    0.00   99.99
    17:36:35    2   0.00   0.00   0.00     0.00   0.00   0.00    0.00    0.00  100.00
    17:36:35    3   0.00   0.00   0.00     0.00   0.00   0.00    0.00    0.00  100.00

and the IRQs have been moved to CPU 0 (the low figures for 1,2,3 are constant throughout and presumably are those that occurred before the script was run)

            CPU0    CPU1    CPU2    CPU3
      0:      50       0       0       0   IO-APIC-edge      timer
      1:       3       1       0       1   IO-APIC-edge      i8042
      8:       0       0       0       0   IO-APIC-edge      rtc0
      9:       0       0       0       0   IO-APIC-fasteoi   acpi
     12:       2       2       1       1   IO-APIC-edge      i8042
     16:    1847      28      20      27   IO-APIC-fasteoi   uhci_hcd:usb3
     17:      19       0       0       0   IO-APIC-fasteoi
     18:     266      20      20      11   IO-APIC-fasteoi   ehci_hcd:usb1, uhci_hcd:usb5, uhci_hcd:usb8
     19:   16354     560     566     562   IO-APIC-fasteoi   ata_piix, ata_piix, uhci_hcd:usb7
     21:       0       0       0       0   IO-APIC-fasteoi   uhci_hcd:usb4
     22:     245       1       0       2   IO-APIC-fasteoi   ohci1394, HDA Intel
     23:     111      27      29      29   IO-APIC-fasteoi   ehci_hcd:usb2, uhci_hcd:usb6
     24:     481       0       0       0   PCI-MSI-edge      eth0
     25:  377712       1       0       1   PCI-MSI-edge      i915
    NMI:       0       0       0       0   Non-maskable interrupts
    LOC:  117573   93136    4044    1135   Local timer interrupts
    SPU:       0       0       0       0   Spurious interrupts
    PMI:       0       0       0       0   Performance monitoring interrupts
    PND:       0       0       0       0   Performance pending work
    RES:     551   19010     313     261   Rescheduling interrupts
    CAL:    1110     439     437     435   Function call interrupts
    TLB:       0       0       0       0   TLB shootdowns
    TRM:       0       0       0       0   Thermal event interrupts
    THR:       0       0       0       0   Threshold APIC interrupts
    MCE:       0       0       0       0   Machine check exceptions
    MCP:       2       2       2       2   Machine check polls
    ERR:       3
    MIS:       0

I have some test kernels I compiled a while back testing the effects of various kernel options, I think the best of these got the latency max jitter down to 15K on the same machine

(albeit that ran like crap too with 3 cores turned off)
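
To put a number on how much of each interrupt source lands on CPU 0, the per-CPU counts from /proc/interrupts can be summarised. The Python sketch below is a hypothetical helper, not an existing tool; it uses three rows copied from the listing in this post and assumes the usual layout of a header row naming the CPUs followed by one row per interrupt source.

```python
def parse_interrupts(text):
    """Return {label: [count per CPU]} for rows that have a count for every CPU."""
    lines = text.strip().splitlines()
    ncpu = len(lines[0].split())  # header row: CPU0 CPU1 ...
    counts = {}
    for line in lines[1:]:
        label, _, rest = line.partition(":")
        per_cpu = []
        for field in rest.split()[:ncpu]:
            if not field.isdigit():
                break
            per_cpu.append(int(field))
        if len(per_cpu) == ncpu:  # skips single-value rows such as ERR and MIS
            counts[label.strip()] = per_cpu
    return counts


def cpu0_share(per_cpu):
    """Fraction of the interrupts handled by CPU 0, or None if there were none."""
    total = sum(per_cpu)
    return float(per_cpu[0]) / total if total else None


sample = """
        CPU0    CPU1    CPU2    CPU3
 19:   16354     560     566     562   IO-APIC-fasteoi   ata_piix, ata_piix, uhci_hcd:usb7
 24:     481       0       0       0   PCI-MSI-edge      eth0
RES:     551   19010     313     261   Rescheduling interrupts
ERR:       3
"""
for label, per_cpu in sorted(parse_interrupts(sample).items()):
    print(label, per_cpu, cpu0_share(per_cpu))
```

Taking two snapshots some time apart and comparing them would show where new interrupts are landing, rather than the totals since boot.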

Shame but those are the figures I got.

regards

Last edit: 15 Dec 2012 00:31 by ArcEye.

15 Dec 2012 02:54 #27737

by awallin

Replied by awallin on topic Reducing latency on multicore pc's - Success!

I have now tried this without seeing much change in the jitter histogram:

www.anderswallin.net/2012/12/real-time-tuning/

Can you post the output of your watchirqs?

Any ideas why I am not seeing improvements?

Anders

15 Dec 2012 18:01 - 15 Dec 2012 18:01 #27759

by ArcEye

Replied by ArcEye on topic Reducing latency on multicore pc's - Success!

I am wondering if the improvement on Spainman's machine actually came from these?

CPU

- disable Vanderpool Technology (cpu virtualization stuff for vm's)

- disable C1E support

I don't actually have them in my BIOS, although I have disabled similar functions regarding power management and temperature control.

I think virtualisation is turned off in the kernel in the RTAI .config anyway.

Last edit: 15 Dec 2012 18:01 by ArcEye.

15 Dec 2012 22:27 #27768

by RGH

Replied by RGH on topic Reducing latency on multicore pc's - Success!

Okay - I never said that this would magically solve all high latency problems or something. That it does not change anything on your machine just means that the latency was not induced by the handling of an IRQ, right?

The low latency values I get are NOT because I changed something in the BIOS by accident like C1E or something!!! That's not me. Maybe I should elaborate a bit more on my testing and optimization process. I spent days on it, running different old machines I had collecting dust and looking for the best one for LinuxCNC.

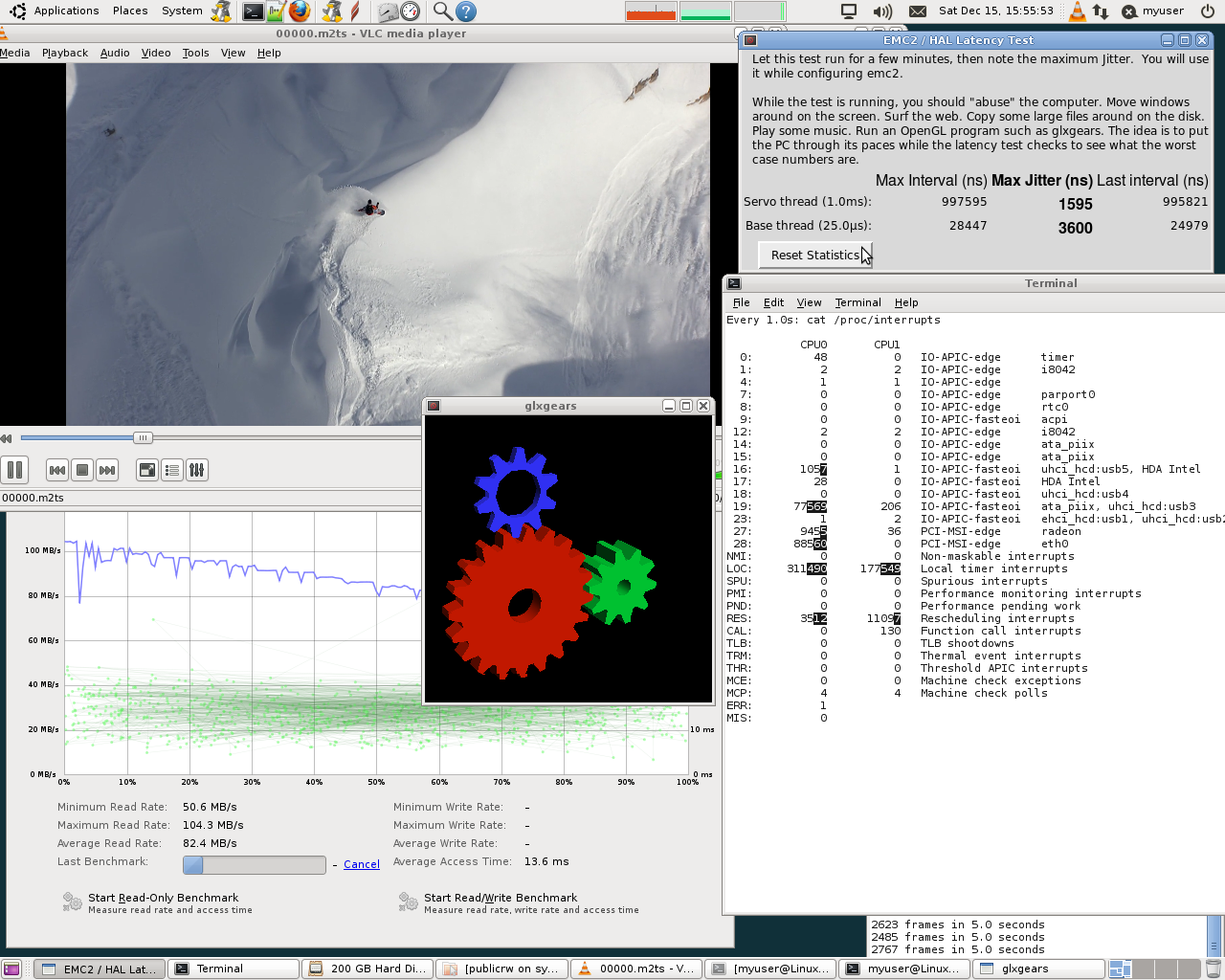

The initial test, to get a picture, was with BIOS default settings, which gave me a regular jitter of around 15000ns with peaks of 30000+ (no isolcpus). Next was changing all the obvious values in the BIOS like C1E, SpeedStep etc. and suspicious values like hardware monitoring, temperature regulation of the CPU fan and others, and setting isolcpus. After further tests, re-enabling ACPI 2.0 and fixing the sleep mode to S3 (instead of AUTO) resulted in <4000ns jitter nearly all the time, with irregular peaks of up to 10000ns that happened during really heavy testing over a period of an hour.

The next step was changing back the "not so obvious" values in the BIOS to see which had an influence and which did not (keeping all CPU settings but TM and disable bit off!), trying other VGA cards, and more and more tests, which revealed that reserving IRQs in the PnP configuration changed the rate at which the 10000ns peaks happened. That led me to the IRQ stuff.

Observing the CPU usage showed that the second CPU irregularly jumped to 2% load although it was isolated. Watching the IRQ handling revealed that the second CPU was doing a lot of the handling. I changed that with the attached scripts and the peak 10000ns values were gone! Changing the affinity mask to F still shows the peak ~10000ns values after running the machine under heavy load for a longer period of time.

So NO! This is not wishful thinking but a measure with reproducible effects on two core2duo machines!

Actually I think that it is quite obvious that unloading the CPU on which the realtime stuff happens CAN have an impact. At least if the max jitter on a machine happens while handling an interrupt request and is not induced by something else.

Cheers,

Ruben

PS: In the meantime I installed the software OpenGL drivers in order to be able to start LinuxCNC in a remote NX session. I will have to do dedicated testing to see what is going on, but when using the remote session (normal "administration work", browsing and doing this and that) the jitter is even lower now, at around 1800ns.

15 Dec 2012 22:31 - 15 Dec 2012 23:01 #27769

by RGH

Replied by RGH on topic Reducing latency on multicore pc's - Success!

Last edit: 15 Dec 2012 23:01 by RGH. Reason: added attachment
