Genserkins failure with custom robot


15 May 2018 10:01 - 15 May 2018 10:02 #110752

by Wireline

Replied by Wireline on topic Genserkins failure with custom robot

Hi Grotius!

Your custom robot interface sounds like a great development. It would be particularly good to have a way of visualising the basic kinematic chain of the bot in the GUI, and not just in Vismach, to check that the calculated joint angles match. As you probably know, inverse kinematics often has multiple solutions for the same target position, as well as singularities (gimbal lock), so a way of making sure Vismach and the controller agree would be very useful. Showing the 6DOF bot's actual spherical work envelope would similarly be great for checking that the G-code sits in a reachable area.
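To make the "multiple solutions" and work-envelope points concrete, here is a minimal sketch for a planar two-link arm rather than a full 6DOF bot. The function names are illustrative, and this is not how genserkins solves its kinematics internally; it only shows that one target generally has two joint solutions (elbow up and elbow down), that the two coincide at the edge of the envelope (a singular, fully stretched or folded arm), and that targets outside the envelope have none.

```python
import math


def two_link_ik(x, y, l1, l2):
    """Return every (theta1, theta2) pair, in radians, that puts the tip of a
    planar two-link arm with link lengths l1 and l2 at (x, y).

    An empty list means the target is outside the reachable annulus.
    """
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if c2 < -1.0 or c2 > 1.0:
        return []  # unreachable: outside the work envelope
    s2 = math.sqrt(1.0 - c2 * c2)
    solutions = []
    # +s2 and -s2 are the two elbow configurations; they are identical
    # when s2 == 0, i.e. at a singular (fully stretched or folded) pose.
    for sign in (1.0, -1.0):
        theta2 = math.atan2(sign * s2, c2)
        theta1 = math.atan2(y, x) - math.atan2(l2 * sign * s2, l1 + l2 * c2)
        solutions.append((theta1, theta2))
    return solutions


def two_link_fk(theta1, theta2, l1, l2):
    """Forward kinematics: tip position for the given joint angles."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


# Two different joint solutions reach the same point (1, 1).
for t1, t2 in two_link_ik(1.0, 1.0, 1.0, 1.0):
    print(math.degrees(t1), math.degrees(t2), two_link_fk(t1, t2, 1.0, 1.0))
```

Running the forward kinematics on each IK result is exactly the kind of cross-check that would show whether Vismach and the controller agree.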

I love the idea of using any kind of perception or robot vision tool! My hope is to retrofit a project I did in ROS to the arm: having it identify objects on a table and pick them up using depth cameras and classification networks. Lasers sound particularly good for toolpath following; I would like to try that too.

As for a tutorial, I will look into doing one, but it's unlikely to be for a while. Is there anything blocking you at the moment? I am happy to try and help. FWIW, I posted an overview of the process here: forum.linuxcnc.org/38-general-linuxcnc-q...-model-into-linuxcnc, but for Vismach it mainly refers to the wikis.

Happy CNC'ing!


Last edit: 15 May 2018 10:02 by Wireline.


15 May 2018 11:03 #110753

by andypugh


It should be possible to write a Vismach model (which is a Python script) that interrogates the INI file DH parameters and adaptively models a matching robot.
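A minimal sketch of the first half of that idea, assuming the DH parameters live in a custom INI section. The section name `[DH]`, the keys `JOINTS`, `A_<n>`, `ALPHA_<n>`, `D_<n>` and the example values are all hypothetical. It uses only Python's standard `configparser` and the classic DH convention, and returns the joint frame origins a simple stick-figure model would need. It does not use the Vismach API, and genserkins may expect a different DH convention, so check the documentation before reusing the numbers.

```python
import configparser
import math

# Hypothetical INI excerpt: section/key names and values are assumptions.
EXAMPLE_INI = """
[DH]
JOINTS = 3
A_0 = 0.0
ALPHA_0 = 1.5707963267948966
D_0 = 300.0
A_1 = 250.0
ALPHA_1 = 0.0
D_1 = 0.0
A_2 = 200.0
ALPHA_2 = 0.0
D_2 = 0.0
"""


def dh_matrix(theta, d, a, alpha):
    """Classic DH link transform: Rz(theta) Tz(d) Tx(a) Rx(alpha)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [[ct, -st * ca, st * sa, a * ct],
            [st, ct * ca, -ct * sa, a * st],
            [0.0, sa, ca, d],
            [0.0, 0.0, 0.0, 1.0]]


def matmul(p, q):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(p[i][k] * q[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def load_dh(ini_text, section="DH"):
    """Read (a, alpha, d) for each joint from the hypothetical INI section."""
    cfg = configparser.ConfigParser()
    cfg.read_string(ini_text)
    n = cfg.getint(section, "JOINTS")
    return [(cfg.getfloat(section, f"A_{i}"),
             cfg.getfloat(section, f"ALPHA_{i}"),
             cfg.getfloat(section, f"D_{i}")) for i in range(n)]


def joint_origins(params, joint_angles):
    """Chain the link transforms and collect each frame origin (x, y, z)."""
    t = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    points = [(0.0, 0.0, 0.0)]
    for (a, alpha, d), theta in zip(params, joint_angles):
        t = matmul(t, dh_matrix(theta, d, a, alpha))
        points.append((t[0][3], t[1][3], t[2][3]))
    return points


print(joint_origins(load_dh(EXAMPLE_INI), [0.0, 0.0, 0.0]))
```

A model script could then draw a link between each consecutive pair of origins, so the on-screen robot always follows whatever numbers the INI file contains.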

I don't have any robots, so I don't have much of an incentive to be the person who does this.


15 May 2018 18:32 - 15 May 2018 19:50 #110772

by Grotius


Hi Wireline,

I will start on the special robot interface screen today and will show a first example soon.

It will be based on, and expand, the current Grotius screen layout, and we can discuss it, improve it and test it.

The big improvement at the moment is my GitHub channel: when the program is ready to test, you can download and compile the example program from there.

Okay, I would be happy if you have time to make a gantry example in Vismach: the simplest gantry model that can be loaded into a basic configuration in minutes, with some guiding text on how to do this. Once you have tested it, please put the files in the documentation section of LinuxCNC and report that it is done. I think for you this is not much work.

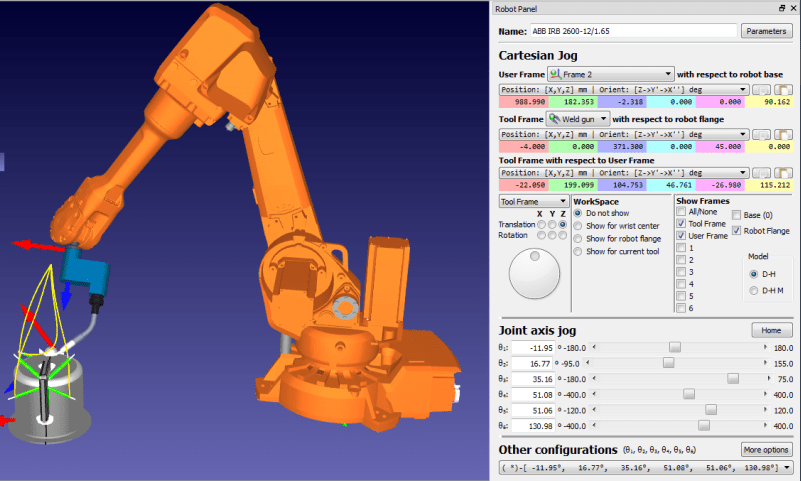

This I found on Google:

Here you see a first attempt. I see a difficulty, but maybe not: is LinuxCNC interpolating the Cartesian movement? I guess yes...

Is that based on your INI file robot setup, i.e. the joints with the mm distances between them in the INI file? If so, then it is easy.

I think home switches are necessary for most users, because of stepper motors. A few users can use servos with battery-backed encoders; they don't need the switches. But a Kuka would be better with automated home switches for when the robot is out of position.

The Kuka tool to calibrate the robot would then no longer be necessary. So what are your thoughts about home sensors?

Think about what you want to see on the screen.

TCP mode is for moving the robot around the tool center point. This is a very nice function, but I don't know whether LinuxCNC has that interpolation mode at the moment; maybe you know.

Handshake is the name for interpolating with an external axis, for example when you are welding on a rotary axis. I don't even know if LinuxCNC has this interpolation mode at this time.

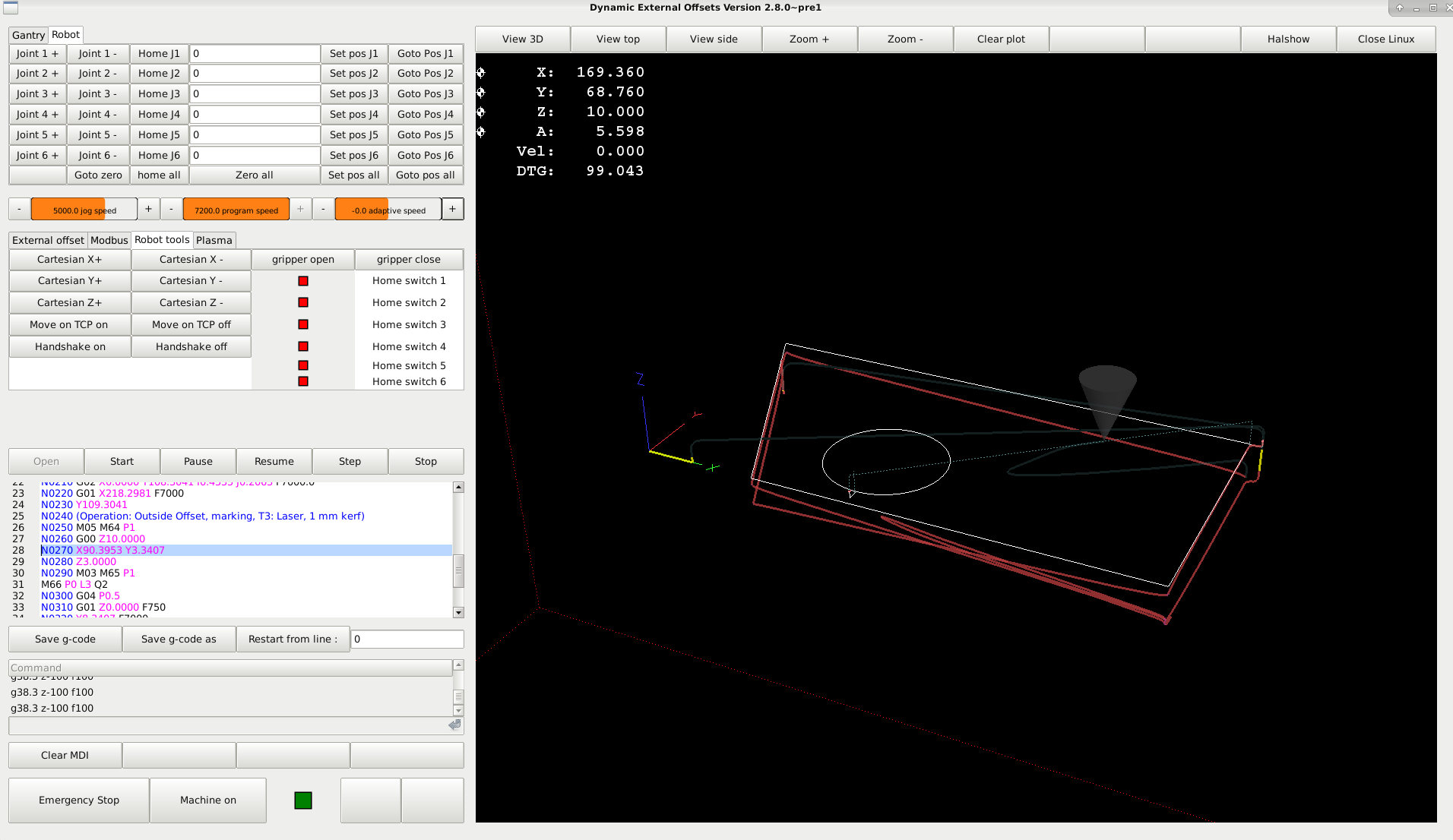

The toolpath you see in red is me playing around with external offsets and negative adaptive speed.

What I want to say about vision systems:

Best is to start with a cheap webcam. Mach3 and SheetCam have webcam plugins. I prefer to search for an example Python webcam script; once you have that, it is easier to implement in real code. We can do this with parametric G-code input or subroutines based on variable G-code. I think that is not really a problem. The difficulty is turning the vector and position into G-code output.
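A minimal sketch of that last step, assuming a fixed camera looking straight down at the table, no lens distortion, and a calibration done beforehand (the `mm_per_px` scale and the reference pixel/table point are hypothetical inputs). Object detection itself is left out. The first output uses plain `G0`/`G1` moves; the alternative passes the position to an O-word subroutine as parameters, where the subroutine name `pick` is hypothetical and would have to exist in your subroutine path.

```python
def pixel_to_table(u, v, mm_per_px, ref_px, ref_mm):
    """Map image pixel (u, v) to table X/Y in mm.

    Assumes a downward-looking, distortion-free camera whose image columns
    run along +X and whose image rows run along -Y; ref_px is a pixel whose
    table position ref_mm was measured during calibration.
    """
    x = ref_mm[0] + (u - ref_px[0]) * mm_per_px
    y = ref_mm[1] - (v - ref_px[1]) * mm_per_px
    return x, y


def pick_moves(x, y, safe_z, pick_z, feed):
    """Plain G-code: rapid above the target, feed down, retract."""
    return [
        f"G0 Z{safe_z:.3f}",
        f"G0 X{x:.3f} Y{y:.3f}",
        f"G1 Z{pick_z:.3f} F{feed:.1f}",
        f"G0 Z{safe_z:.3f}",
    ]


def pick_subroutine_call(x, y):
    """Pass the position to a (hypothetical) O-word subroutine instead."""
    return f"o<pick> call [{x:.3f}] [{y:.3f}]"


x, y = pixel_to_table(420, 240, 0.5, (320, 240), (100.0, 50.0))
print("\n".join(pick_moves(x, y, safe_z=20.0, pick_z=2.0, feed=300.0)))
print(pick_subroutine_call(x, y))
```

The subroutine variant keeps the motion logic in G-code, so the Python side only has to produce numbers.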

If you use two cameras, you can calculate depth.
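For two cameras, the usual relation, assuming a rectified pair (parallel optical axes, same focal length, image rows aligned), is Z = f * B / d: focal length f in pixels, baseline B between the cameras, and disparity d, the horizontal pixel shift of the same feature between the two images. A minimal sketch; finding the matching feature in both images is the hard part and is not shown.

```python
def stereo_depth(x_left_px, x_right_px, focal_px, baseline_mm):
    """Depth (same unit as baseline_mm) of a feature seen by a rectified
    stereo pair, from its horizontal pixel position in each image."""
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        # Zero disparity means the point is at infinity (or mismatched).
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity


# A feature 40 px apart between the images, 800 px focal length, 60 mm baseline.
print(stereo_depth(340.0, 300.0, focal_px=800.0, baseline_mm=60.0))  # 1200.0
```

Because depth is inversely proportional to disparity, one pixel of matching error costs more depth accuracy the farther the object is from the cameras.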

I would say keep on cutting!


Last edit: 15 May 2018 19:50 by Grotius.


15 May 2018 22:02 #110776

by andypugh


LinuxCNC can do tool-centre-point motion.

There is an already-existing gantry Vismach model in LinuxCNC

sim->axis->vismach->5-axis->bridge mill

That model actually does the tool-centre-point motion that you were asking about, once homed.

And there is already a Vismach tutorial here: linuxcnc.org/docs/2.7/html/gui/vismach.html
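The geometry behind tool-centre-point motion can be sketched for the simplest case: a single head that tilts about the Y axis, with the tool pointing along -Z at zero tilt and its tip a fixed tool length from the pivot. To keep the tip still while the angle changes, the pivot (which the linear axes actually move) has to be shifted by the rotated tool vector. This is an illustrative 2D sketch in the XZ plane with assumed names, not the code of the bridge mill's kinematics module.

```python
import math


def tip_from_pivot(pivot_x, pivot_z, tilt_rad, tool_length):
    """Tool tip position for a head pivot at (pivot_x, pivot_z) tilted about Y."""
    # The tool vector (0, 0, -L) rotated about Y by tilt_rad.
    return (pivot_x - tool_length * math.sin(tilt_rad),
            pivot_z - tool_length * math.cos(tilt_rad))


def pivot_for_tip(tip_x, tip_z, tilt_rad, tool_length):
    """Inverse: where the pivot must be to keep the tip at (tip_x, tip_z)."""
    return (tip_x + tool_length * math.sin(tilt_rad),
            tip_z + tool_length * math.cos(tilt_rad))


# Sweep the tilt while holding the tip at X=0, Z=0 with a 100 mm tool:
# the pivot moves on a 100 mm arc around the tip.
for deg in (0, 15, 30, 45):
    px, pz = pivot_for_tip(0.0, 0.0, math.radians(deg), 100.0)
    print(f"tilt {deg:2d} deg -> pivot X{px:8.3f} Z{pz:8.3f}")
```

A full 5-axis or 6DOF kinematics module does the same thing in 3D with every rotary axis chained together.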


27 Jan 2020 09:42 #155886

by Aciera


Wireline,

I'm trying to set up the DH parameters for a similar robot.

Could you post the final DH parameters of your setup?


28 Jan 2020 12:33 #156002

by Aciera


Ok, never mind. I got it working.
