ROS LinuxCNC Link

09 May 2021 18:39 #208402

by Bari

Replied by Bari on topic ROS LinuxCNC Link

Nice work! Teach modes for robots are good enough for moving things like a pallet around but fall short for most things with precision movements.

How would you be able to hook into something like machine vision that will create offsets for the "learned" paths? An example might be that a robot moves to the corner of a circuit board where there is a fiducial. The camera looks at the pattern and outputs an offset to center the end of arm tool.
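The data flow in this question can be sketched in a few lines. This is only an illustration, assuming the camera axes are aligned with the robot's X and Y axes and that a mm-per-pixel scale is known from calibration. The function name and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offset:
    dx_mm: float
    dy_mm: float


def fiducial_offset(found_px, expected_px, mm_per_px):
    """Convert the pixel error of a fiducial into an XY offset in mm.

    Assumes camera axes aligned with robot X/Y and a calibrated,
    uniform scale (both are assumptions, not from the thread).
    """
    dx = (found_px[0] - expected_px[0]) * mm_per_px
    dy = (found_px[1] - expected_px[1]) * mm_per_px
    return Offset(dx, dy)


# Hypothetical values: fiducial found 12 px further in x and 8 px less in y.
offset = fiducial_offset(found_px=(652, 472), expected_px=(640, 480), mm_per_px=0.01)
print(offset)
```

The resulting offset is what the motion controller would need to accept as an external input.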

The following user(s) said Thank You: Grotius

09 May 2021 20:45 #208413

by rodw

You may be able to use something like this: www.intelrealsense.com/lidar-camera-l515/

These return a depth image stream (plus infrared and RGB streams) that gives the distance from the camera to objects at the pixel level.

I've been playing with one for dimensioning parcels, in keeping with your warehouse theme. We just got weighing scales integrated last night.
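To make "distance at the pixel level" concrete, here is a minimal sketch of how one depth pixel can be turned into a 3D point with a plain pinhole camera model. It assumes no lens distortion, and the intrinsics (fx, fy, cx, cy) are made-up values, not those of any particular camera.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with a depth reading to a point in the camera frame.

    Pinhole model without distortion (an assumption for this sketch).
    fx, fy are focal lengths in pixels; cx, cy is the principal point.
    """
    x = (u - cx) / fx * depth_m
    y = (v - cy) / fy * depth_m
    return (x, y, depth_m)


# Hypothetical intrinsics for a 1280x720 depth image.
point = deproject(u=740, v=360, depth_m=2.0, fx=600.0, fy=600.0, cx=640.0, cy=360.0)
print(point)
```

Two such points on opposite edges of a parcel would give one of its dimensions.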

The following user(s) said Thank You: Grotius

09 May 2021 21:25 #208421

by Bari

Replied by Bari on topic ROS LinuxCNC Link

@rodw the image processing part I have down. I go back to the olden days of industrial television. When we wanted to use a computer to process video we had to wait for fast enough ones to be invented.

I was wondering about how to hook external inputs such as offsets into his robot control idea.

10 May 2021 12:40 #208475

by Roiki

Replied by Roiki on topic ROS LinuxCNC Link

You would want to use ROS for this. It already has support for RealSense cameras and more, so all you need to figure out is what you want to use it with.

As for the robot code, you should build a parser and use something like URScript as a base so it can work with offline programmers. Using Gcode or straight C isn't a good solution. It's just going ass backwards up a tree.

By the way, there is an undocumented Python API for HAL streams, so you can stream HAL data to RT components from Python programs. That was a nice find.

10 May 2021 13:10 - 10 May 2021 13:47 #208476

by Grotius

Replied by Grotius on topic ROS LinuxCNC Link

Hi,

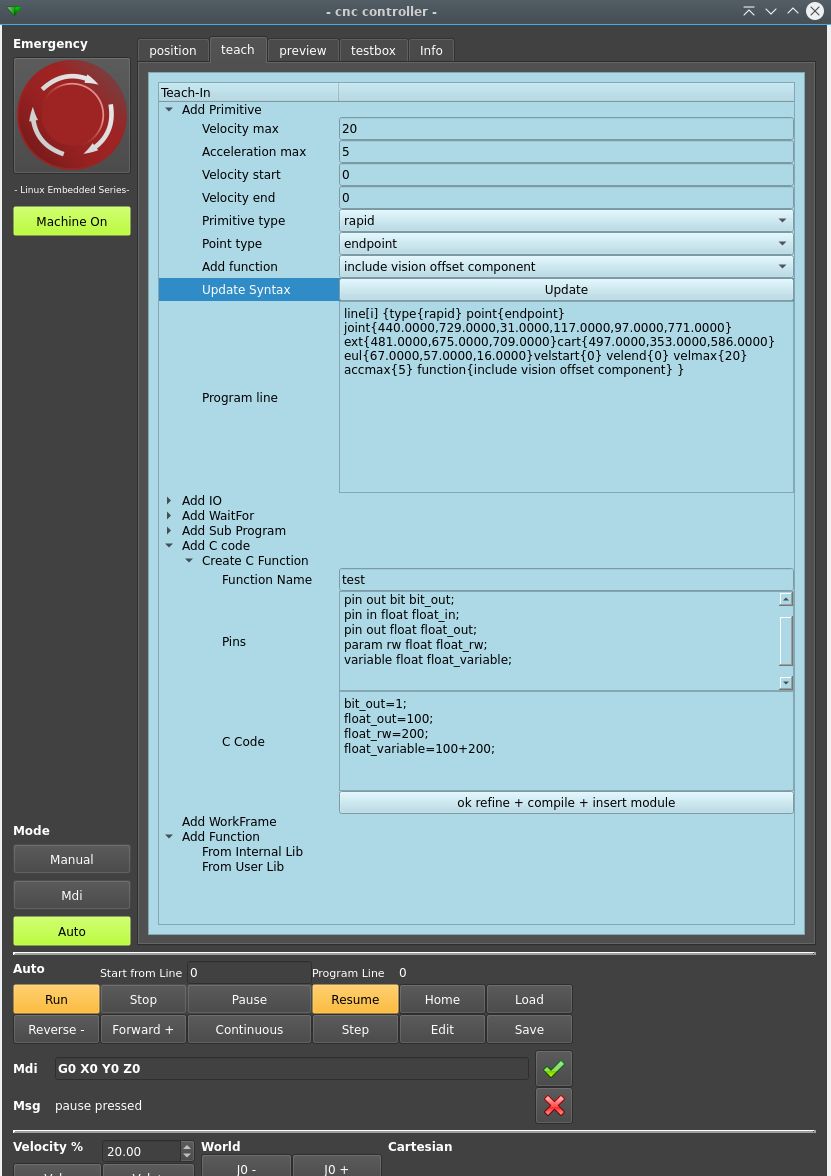

Today I started coding the teach-in primitives in the treewidget.

The basic 3D primitives like point, line, arc and spline are ready.

The program line is autogenerated and can be edited by the user in the text display window.

For info, the program produces random values when running in demo mode.

@Roiki,

"C isn't a good solution."

Why is HAL written in C? Maybe try to write HAL or a kernel module in Python.

Python is for me the most illogical language ever written.

"Using Gcode isn't a good solution."

If the program can deal with Gcode you don't have to worry about "how can my robot execute a CAD/CAM processed file?"

@Bari,

You can see in the program that there is a function loaded in this program line. The function is called "include vision offset component".

This function is active while this program line is executed.

The way of creating a function inside this program is new. A function is like calling something like "gripper open".

Further on the screen you can see a function builder appear in the treewidget.

Here you can add offsets or whatever logic you want. This function builder is an ultimate control feature.

The created functions have a .so extension, are added directly to /rtlib, and are added to the "Add function" content combobox.

To use a live vision system, we have to switch the controller to operate in live mode. Normally it would operate in stream mode.

Another option is to process the data of the vision system first and then execute it. Then the machine can stay in stream mode.
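The second option, processing the vision data first and then executing, can be pictured as shifting the taught path before it is handed to the streamer. This is a minimal sketch, not the actual program: the function name, the tuple layout and the numbers are hypothetical, and only a pure translation is handled.

```python
def offset_path(path, dx=0.0, dy=0.0, dz=0.0):
    """Return a new path with every (x, y, z) waypoint shifted by the offset.

    The taught path itself is left untouched so it can be reused
    for the next part with a different offset.
    """
    return [(x + dx, y + dy, z + dz) for (x, y, z) in path]


# Hypothetical taught waypoints in mm and a hypothetical vision offset.
taught_path = [(0.0, 0.0, 5.0), (10.0, 0.0, 5.0), (10.0, 10.0, 5.0)]
corrected_path = offset_path(taught_path, dx=0.12, dy=-0.08)
print(corrected_path)
```

Because the correction is applied before execution, the controller only ever sees an ordinary pre-processed path.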

It's just going ass backwards up a tree.

@Arciera,

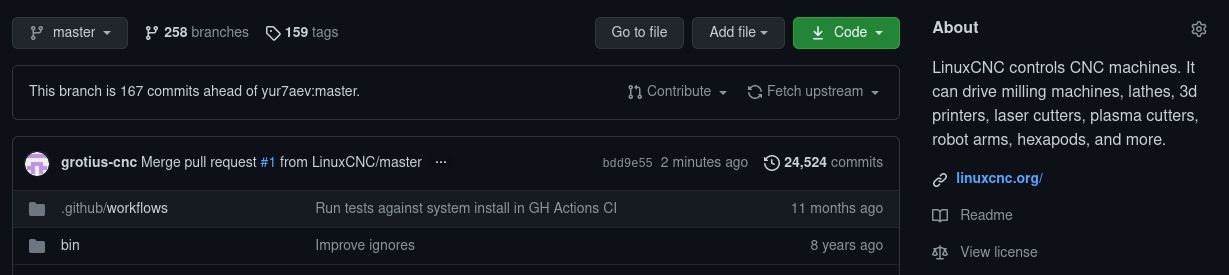

I looked into the dmitrys nyx-branch. It is quite close to master branche to my surprise.

It can be used, no problem.

Last edit: 10 May 2021 13:47 by Grotius.

The following user(s) said Thank You: Bari, Aciera

10 May 2021 14:09 #208479

by Aciera

Thanks for looking into it. There has actually been talk of including the driver into master but it hasn't happened yet.

If you can include that in your system then I'll be more than happy to test your application.

How do you think your system will be installed? Built from source or an ISO image?

10 May 2021 16:51 #208487

by Roiki

Replied by Roiki on topic ROS LinuxCNC Link

I mean as a language for the movements that control the robot, like Gcode, which is an interpreted scripting language. You want something similar and not just direct C code or something. You always want to validate your instructions.

Also, Gcode is so different from what a robot needs that you'll have to modify it so far beyond RS274 that it's no longer really Gcode. Most robot controllers can also parse up to 5-axis Gcode programs inside their own parsers. But their own language has the functionality that robots need.
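The "interpret and validate first" idea can be sketched as a tiny parser that checks a whole program before any motion is issued. The command names below are borrowed loosely from robot scripting languages, but the argument layout and the command table are made up for this example and do not follow the URScript grammar.

```python
import re

# Hypothetical command table: name -> required number of numeric arguments.
COMMANDS = {"movel": 3, "movej": 6, "wait": 1}
LINE_RE = re.compile(r"^\s*(\w+)\s*\(([^()]*)\)\s*$")


def parse_line(line):
    """Parse one line like 'movel(1, 2, 3)' or raise ValueError."""
    match = LINE_RE.match(line)
    if match is None:
        raise ValueError(f"syntax error: {line!r}")
    name, arg_text = match.groups()
    if name not in COMMANDS:
        raise ValueError(f"unknown command: {name}")
    # float() raises ValueError for anything that is not a number.
    args = [float(a) for a in arg_text.split(",")] if arg_text.strip() else []
    if len(args) != COMMANDS[name]:
        raise ValueError(f"{name} expects {COMMANDS[name]} arguments, got {len(args)}")
    return name, args


def parse_program(text):
    """Validate every non-empty line before anything is executed."""
    return [parse_line(line) for line in text.splitlines() if line.strip()]


steps = parse_program("movel(0, 0, 10)\nwait(0.5)\nmovel(5, 0, 10)")
print(steps)
```

A malformed line anywhere in the program raises an error up front, so the robot never starts a program it cannot finish parsing.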

10 May 2021 17:16 - 10 May 2021 17:35 #208489

by Aciera

Replied by Aciera on topic ROS LinuxCNC Link

@Roiki

Actually, I'm not aware of any support for industry-style robot programming even in ROS. Last time I had a look at it, one had to use C++ or Python scripts to send the commands. But maybe this has changed.

[edit]

In any case I'm not really fussy about the programming language. If I can program my robot using points from a teach-in function in JOINT, XYZABC and TOOL coordinates, can create components that use external IO, subroutines and IF/THEN statements, AND have smooth movements using proper kinematics, then I'm a pretty happy camper.

Certainly I will want to simulate that code before I run it on the actual hardware. Simulation is pretty much a must with robots.

To be sure, having an industry-style language that's compatible with third-party software like RoboDK using postprocessors would be VERY useful, but surely we can't really expect Grotius to single-handedly roll out a product like that in a few weeks.

Last edit: 10 May 2021 17:35 by Aciera.

10 May 2021 17:52 #208492

by Bari

The problem I have with ROS and with most robots is the lack of real-time support for things that have to be synchronized in well under 1 ms. I need to synchronize motion to mechanisms, e.g. printheads, lasers, etc., that operate a hundred times faster, and I can't use interpolation. Try asking for encoder outputs from inside a robot so that you can keep track of position in real time. I need realer time!
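A quick back-of-the-envelope calculation shows why the timing matters: the position uncertainty is simply tool speed multiplied by timing error. The 500 mm/s speed below is a hypothetical figure chosen for illustration. The 1 ms and 10 µs values correspond to "1 ms" and "a hundred times faster".

```python
def position_error_um(speed_mm_s, timing_error_s):
    """Distance travelled, in micrometres, during a given timing error."""
    return speed_mm_s * timing_error_s * 1000.0


SPEED_MM_S = 500.0  # hypothetical tool speed, not a figure from the thread

error_at_1ms = position_error_um(SPEED_MM_S, 1e-3)
error_at_10us = position_error_um(SPEED_MM_S, 1e-5)
print(f"1 ms timing error  -> {error_at_1ms:.1f} um")
print(f"10 us timing error -> {error_at_10us:.1f} um")
```

At that speed a 1 ms error is half a millimetre of travel, while 10 µs is about 5 µm.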

I'm not a fan of Intel, and the depth measurement accuracy of their cameras is only 2%. In my circuit board fiducial application XY is of most interest since the PCBs are pretty consistent in thickness. A few microns of variance in Z will cover the worst of applications. I don't need stereo vision for this.

10 May 2021 17:58 - 10 May 2021 18:03 #208493

by Grotius

Replied by Grotius on topic ROS LinuxCNC Link

Hi Aciera,

Here is a tiny present for you :

link

Hi Bari,

My program uses 1000 preprocessed frames a second. It's a different approach. It's like playing a video.
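The "playing a video" idea can be illustrated by chopping a move into one position per millisecond ahead of time. This is only a sketch of the general approach, not the actual program: it assumes a straight line at constant speed with no acceleration limits, and the function name and numbers are hypothetical.

```python
import math


def frames_for_move(start, end, speed_mm_s, frame_rate_hz=1000):
    """Pre-compute one position per frame for a straight move.

    Constant speed and linear interpolation only (an assumption
    for this sketch). The last frame lands exactly on the end point.
    """
    distance = math.dist(start, end)
    count = max(1, math.ceil(distance * frame_rate_hz / speed_mm_s))
    return [
        tuple(s + (e - s) * i / count for s, e in zip(start, end))
        for i in range(1, count + 1)
    ]


# Hypothetical move: 10 mm along X at 100 mm/s takes 0.1 s, i.e. 100 frames.
frames = frames_for_move((0.0, 0.0, 0.0), (10.0, 0.0, 0.0), speed_mm_s=100.0)
print(len(frames), frames[0], frames[-1])
```

The real-time side then only has to output the next frame on every tick, with no path planning inside the loop.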

Last edit: 10 May 2021 18:03 by Grotius.
